As customer demands have grown more complex, so has the thinking about how to manage the customer experience (CX), especially when it comes to digital experience strategy. Watching the scoreboard and reacting to situations only as they occur in real time was never enough. If you want to forge meaningful connections with customers while strengthening your bottom line, you need to stay constantly aware of what drives their digital behavior. This is one of the first steps toward Experience Improvement (XI), and brands need to take it if they want not only to retain customers but also to make a difference for them.

The following are three quick methods brands can leverage to learn what drives customers’ online behavior, enabling them to begin or continue a cycle of continuous improvement:

- Challenge Your Assumptions

- Know Your Drivers

- Leverage All Your Data

Method #1: Challenge Your Assumptions

This is an important step to take no matter how well you know your customers. As we said earlier, CX expectations are changing, so it never hurts to reevaluate your brand journey through your customers’ eyes. With that goal in mind, create some surveys, interview your customers, and map out your current journey. You might be surprised by what you learn!

Once you understand your customers’ current expectations, use them to get to know your clientele better as people. Being personable is its own reward, and customers will always prefer an organization where everybody knows their name. Besides, knowing the people who sustain your brand helps employees become more invested in its mission and vision.

Method #2: Know Your Drivers

It’s always a good idea to take a hard look at your customers’ behaviors, especially those that seem to correlate with growth and retention, so you can find the moments that matter. When you find those behaviors, you’ve found the things that have the largest impact on both customers’ interactions with your brand and your business as a whole.

Knowing these behaviors can give you a ton of intel and context on how to brush up your customer touchpoints, map new segments of your customer journey, and reach those individuals with new products and services you know they’ll love. This ties into the notion of future-proofing: knowing what your customers may want before they know it themselves, a foresight that will make your brand even more competitive.

Method #3: Leverage All Your Data

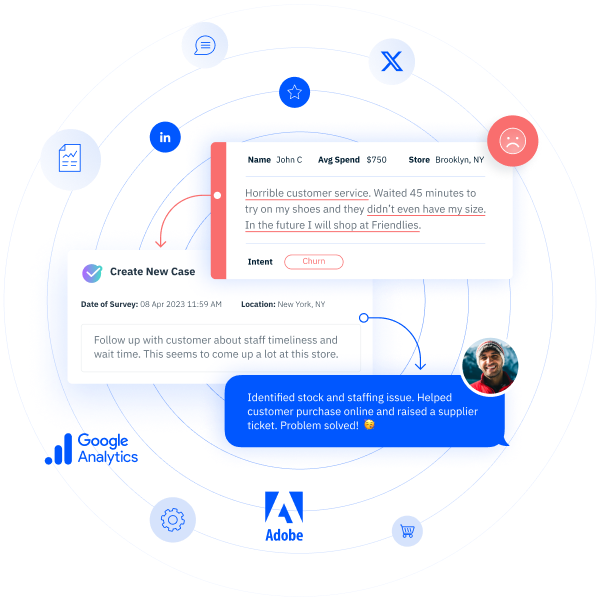

Knowing how your customers behave is great, but it’s only half the battle. The final step toward understanding what drives your customers’ digital activities is placing their behavior against a backdrop of other metrics. Financial data, operational information, and other contextual information belong in that backdrop, as do sources such as social media, voice of the customer (VoC), CRM, and website/app data.

The Power of a Well-Executed Digital Experience Strategy

Pulling all of this information together can take time, especially if it’s siloed across multiple teams, but if you can manage it, you’ll have a 360-degree view of your customer that goes beyond ‘just’ digital drivers. This holistic understanding not only lets your organization build a hyper-accurate profile of your customer but also unites your entire organization around it, enabling you to create meaningfully improved experiences that bring customers back, strengthen your bottom line, and lift your organization to the top of your vertical.

Looking to add to your digital experience strategy? Our latest eBook lays out four quick wins that will put some points on the board for your customer experience team in the best way possible! Check it out here.