Top 10 AI Terms Every CX Pro Should Know

Here are ten artificial intelligence (AI) terms every CX professional needs to know to keep up with the customer intelligence conversation.

There’s a lot of talk about artificial intelligence (AI) in CX these days. Most CX pros will never write an algorithm, but understanding the fundamentals of analytics and AI is no longer optional; it’s a necessity. Here are the 10 key AI terms every CX practitioner should know in order to keep up with the AI conversation:

1. Artificial Intelligence

Advanced software that learns and performs tasks that normally require human intelligence, such as decision-making, speech and image recognition, and translation.

2. Analytics

The detailed examination of data as the basis for discussion or interpretation. There are several types of analytics. Gartner breaks them out this way:

- Descriptive – Characterized by traditional business intelligence (BI) and visualizations such as pie charts, bar charts, line graphs, tables, or generated narratives.

“What happened?”

- Diagnostic – Characterized by techniques such as drill-down, data discovery, data mining, and correlations.

“Why did it happen?”

- Predictive – Characterized by techniques such as regression analysis, forecasting, multivariate statistics, pattern matching, and predictive modeling.

“What is going to happen?”

- Prescriptive – Characterized by techniques such as graph analysis, simulation, complex event processing, neural networks, recommendation engines, heuristics, and machine learning.

“What should be done?”
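
To make the difference between "What happened?" and "What is going to happen?" concrete, here is a minimal sketch in Python. The monthly satisfaction scores are invented for illustration, and the straight-line trend is only one simple forecasting technique among many; it is not a description of how any particular analytics product works.

```python
def fit_line(values):
    """Fit a least-squares straight line through values observed at x = 0, 1, 2, ..."""
    n = len(values)
    xs = range(n)
    mean_x = sum(xs) / n
    mean_y = sum(values) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, values)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical monthly satisfaction scores (assumed data, not from a real survey)
monthly_scores = [70, 72, 74, 76, 78]

# Descriptive: summarize what already happened
average_score = sum(monthly_scores) / len(monthly_scores)

# Predictive: extend the fitted trend one month into the future
slope, intercept = fit_line(monthly_scores)
next_month_forecast = slope * len(monthly_scores) + intercept

print("Average so far:", average_score)
print("Forecast for next month:", next_month_forecast)
```

The descriptive step only summarizes past data, while the predictive step uses the pattern in that data to estimate a value that has not been observed yet.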

3. Machine Learning

This is a term sometimes used interchangeably with AI, but in fact, it’s just one component, albeit an important one. Machine learning gives computers the ability to learn a task or function without being explicitly programmed. There are several different types of machine learning, including:

- Supervised – The task or function is learned from labeled training data, most often curated by a human. The algorithm adapts to new situations by generalizing from the training data to act in a “reasonable” way.

- Unsupervised – The task or function is learned from hidden structures in “unlabeled” data. Because the dataset is unlabeled, there are no known correct answers against which to measure the accuracy of the resulting model.

- Neural Networks – A number of processors operating in parallel and arranged in tiers. The first tier receives the raw data, and then passes on its “knowledge” to each successive tier.

- Deep Learning – A machine learning approach that builds hierarchical representations of the data, with each level learning from the one before it. It is most commonly implemented with neural networks that have many tiers.
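
As a rough illustration of the supervised case, the sketch below "learns" from a handful of labeled examples and then labels new customers by finding the most similar example (a technique called nearest neighbor). The features, the labels, and the data are all hypothetical, and real systems use far more data and more capable methods.

```python
import math

# Hypothetical labeled training data:
# (satisfaction score 0-10, support tickets filed) -> what the customer did
TRAINING_DATA = [
    ((9, 0), "stays"),
    ((8, 1), "stays"),
    ((7, 1), "stays"),
    ((3, 4), "leaves"),
    ((2, 5), "leaves"),
    ((4, 6), "leaves"),
]


def predict(features):
    """Return the label of the closest training example (1-nearest-neighbor)."""
    _, nearest_label = min(
        TRAINING_DATA, key=lambda example: math.dist(example[0], features)
    )
    return nearest_label


print(predict((8, 0)))  # resembles the "stays" examples
print(predict((3, 5)))  # resembles the "leaves" examples
```

Nobody wrote a rule such as "a score below 5 means the customer leaves". The behavior comes entirely from the labeled examples, which is what "without being explicitly programmed" means in practice.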

4. Algorithms

A set of rules or a process to be followed in calculations, especially by a computer.
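
A familiar CX example of an algorithm is the Net Promoter Score calculation: count the promoters (ratings of 9 or 10) and the detractors (ratings of 0 to 6), then subtract the detractor percentage from the promoter percentage. The sketch below writes those steps in Python; the function name and the sample ratings are made up for illustration.

```python
def net_promoter_score(ratings):
    """Compute NPS from a list of 0-10 'likelihood to recommend' ratings."""
    if not ratings:
        raise ValueError("at least one rating is required")
    promoters = sum(1 for rating in ratings if rating >= 9)
    detractors = sum(1 for rating in ratings if rating <= 6)
    # Percentage of promoters minus percentage of detractors
    return 100 * (promoters - detractors) / len(ratings)


sample_ratings = [10, 9, 8, 7, 6, 3, 10, 9]  # hypothetical survey responses
print(net_promoter_score(sample_ratings))  # prints 25.0
```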

5. Models

Algorithms that analyze and visualize data through a specific lens. Some notable types of models include:

- Anomaly – Recognition of a significant change in the data being monitored (e.g., an increase in the frequency of a particular issue being discussed by customers).

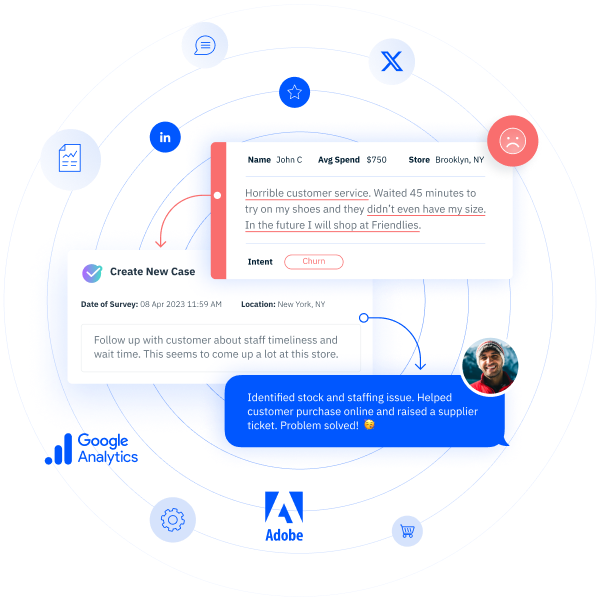

- Churn – The likelihood of loss, especially of a customer or employee leaving an organization.

- Recommendation – A set of suggested actions intended to improve a specific key metric.

- Financial Forecast – A prediction about future performance based on historical performance data.
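
To show what one of these models might look like in its simplest form, here is a sketch of an anomaly check. It flags the latest weekly count of customer mentions if it sits far outside the normal range of past weeks. The data, the function name, and the threshold of three standard deviations are assumptions chosen for illustration; production models are usually more sophisticated.

```python
import statistics


def is_anomaly(history, latest, threshold=3.0):
    """Flag `latest` if it is more than `threshold` standard deviations from the historical mean."""
    mean = statistics.mean(history)
    spread = statistics.stdev(history)
    if spread == 0:
        # Every past value is identical, so any different value stands out
        return latest != mean
    return abs(latest - mean) / spread > threshold


# Hypothetical weekly counts of customers mentioning "late delivery"
weekly_mentions = [12, 15, 11, 14, 13, 12, 16, 14]

print(is_anomaly(weekly_mentions, 15))  # within the usual range
print(is_anomaly(weekly_mentions, 40))  # a sharp spike
```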

6. Speech Analysis

Recognition of customer tone, pitch, and volume to determine customer sentiment and emotion.

7. Automated Speech Transcription (Speech-to-Text)

Automated recognition of digitized speech waveforms and their conversion to text.

8. Facial Recognition

Machine learning applied to images to identify the key characteristics of human faces. Typical applications include identifying a particular person (to unlock a device) or the emotions they may be expressing (a customer is unhappy).

9. Text Analytics

Identification of valuable concepts from human-created text data, such as social reviews and survey comments.

- Natural Language Processing (NLP) – The application of computer processing to derive patterns and meaning from large sets of text-based data. There are two main approaches to NLP: rule-based and machine learning. With rule-based NLP, human curation plays a role in creating and refining dictionaries, rules, and patterns, which are then coded into the computer. With machine learning, the computer learns from existing data sets and then automatically generates the rules that drive the analysis of the text data.

- Natural Language Generation (NLG) – The automated creation of text data using computational linguistics to streamline the interaction between humans and computers. Voice assistants such as Siri and Alexa use NLG to produce their responses.
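
The rule-based approach to NLP can be sketched in a few lines of Python. Here, a person has curated two small word lists, and the program simply counts matches in each comment. The word lists and the function name are hypothetical, and real dictionaries are much larger and handle negation, misspellings, and context.

```python
import re

# Human-curated dictionaries (hypothetical and deliberately tiny)
POSITIVE_WORDS = {"great", "friendly", "fast", "helpful", "love"}
NEGATIVE_WORDS = {"slow", "rude", "broken", "late", "terrible"}


def score_comment(comment):
    """Label a comment by counting dictionary words that appear in it."""
    words = re.findall(r"[a-z']+", comment.lower())
    positives = sum(1 for word in words if word in POSITIVE_WORDS)
    negatives = sum(1 for word in words if word in NEGATIVE_WORDS)
    if positives > negatives:
        return "positive"
    if negatives > positives:
        return "negative"
    return "neutral"


print(score_comment("The staff were friendly and helpful!"))
print(score_comment("Delivery was late and the box arrived broken."))
print(score_comment("I placed an order on Tuesday."))
```

A machine learning approach would replace the hand-written word lists with patterns learned from comments that have already been labeled.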

10. Internet of Things

The interconnection, via the internet, of computing devices embedded in everyday objects, enabling them to send and receive data. For example, a connected car that shares data with an app on your phone.
