Placement of Survey Questions

In this article, MaritzCX examines the nature of survey questions and illustrates how their placement connects to business results.

The placement of certain key survey questions – particularly the overall satisfaction question in a customer satisfaction questionnaire – has been extensively debated among academics, suppliers, and clients.

MaritzCX's point of view, outlined below, is the result of discussions in our Research Leadership Council sessions and our Marketing Sciences Department, a review of relevant academic literature, and a limited amount of side-by-side testing.

Importantly, no overwhelming body of evidence indicates whether the key metrics in a survey, particularly the overall satisfaction question, should come first (before the attributes) or last (after the attributes). Some studies have shown that the overall-last design produces a stronger relationship (a higher R-squared) between overall satisfaction and the attributes, presumably because the preceding attributes influence the overall satisfaction measure through context effects. In fact, some suppliers recommend the overall-last design for exactly this reason.

MaritzCX has the opposite point of view: We recommend the overall-first design to achieve the least-biased, best estimate of the real level of satisfaction that exists among a company’s customers.

Here is the rationale:

- The goal of marketing research is to interview a sample of people in order to understand the entire universe of those people; for example, interviewing a sample of customers to represent all of the company’s (un-surveyed) customers. The goal is not to change customers’ perceptions as a result of having participated in the survey.

- In any survey design, context effects from prior questions are unavoidable. The best survey designs eliminate, or at least minimize, context effects on the most important variables in the study. In general, the most important questions should appear early in the questionnaire, which minimizes both respondent fatigue and bias from prior questions.

- In customer satisfaction research, overall satisfaction is usually the most important measure in the study, the one on which compensation and other performance awards are based. Therefore, it should be sheltered as much as possible from context effects in the design, i.e., placed early in the questionnaire.

- If the overall-last design produces a higher R-squared or "driver" relationship between the attributes and the overall rating, this typically means that the preceding attributes are changing the overall rating (otherwise, there would be no difference between the two designs). As a result, modifying the attribute battery could on its own change the overall satisfaction rating. This is a serious problem for a tracking study, in which attributes commonly change between the benchmark and rollout waves, or from year to year as company operational priorities change. Asking the overall satisfaction question first lets clients change the attribute battery at any time without this concern.

- In an overall-last design, if the satisfaction rating is changed by the preceding attributes, it may not have the same linkage to downstream customer behaviors (e.g., loyalty, advocacy) and/or business results that exists in the true customer universe. Any models built on that rating could therefore be misspecified.

For these reasons, asking the overall question before the attributes appears to be the better choice in either scenario: if there is no context effect, overall-first makes sense because it is less subject to respondent fatigue; if there is a context effect, the overall-first design produces the least-biased, most stable, and most useful measure of overall satisfaction.
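The two concerns above (an inflated R-squared and an overall rating that moves when the attribute battery changes) can be illustrated with a toy simulation. This is a hypothetical sketch, not MaritzCX data or methodology. It assumes each respondent has a latent "true" satisfaction score, that attribute ratings and an uninfluenced overall rating equal that score plus random noise, and that in the overall-last design the reported overall rating is pulled toward the average of the attributes just rated by an assumed `context_weight` of 0.5. Ratings are unbounded continuous values rather than points on a real response scale, and R-squared is computed as the squared correlation between the overall rating and the attribute average, a simplification of a full driver regression. The names `simulate` and `pearson_r` and all parameter values are illustrative.

```python
import random
import statistics


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def simulate(design, attribute_offsets, n=5000, context_weight=0.5, seed=1):
    """Return (mean overall rating, R-squared of overall vs. attribute average).

    design: "overall_first" or "overall_last".
    attribute_offsets: hypothetical attribute battery; each entry shifts that
        attribute's rating relative to the respondent's true satisfaction.
    context_weight: assumed share of the overall-last rating that is pulled
        toward the average of the attributes the respondent just rated.
    """
    rng = random.Random(seed)
    overall, attr_avgs = [], []
    for _ in range(n):
        # Latent "true" satisfaction (unbounded, not clamped to a response scale).
        true_sat = rng.gauss(7.0, 1.5)
        attrs = [true_sat + off + rng.gauss(0, 1.0) for off in attribute_offsets]
        attr_avg = statistics.fmean(attrs)
        # Overall rating as it would be given with no preceding questions.
        uninfluenced = true_sat + rng.gauss(0, 1.0)
        if design == "overall_first":
            rating = uninfluenced
        elif design == "overall_last":
            # Assumed context effect: a linear pull toward the attribute average.
            rating = (1 - context_weight) * uninfluenced + context_weight * attr_avg
        else:
            raise ValueError(f"unknown design: {design}")
        overall.append(rating)
        attr_avgs.append(attr_avg)
    # Single-predictor R-squared equals the squared Pearson correlation.
    return statistics.fmean(overall), pearson_r(overall, attr_avgs) ** 2


if __name__ == "__main__":
    benchmark = [0.0, 0.0, 0.0]          # hypothetical benchmark battery
    revised = [0.0, 0.0, 0.0, -3.0]      # same battery plus one low-scoring attribute
    for design in ("overall_first", "overall_last"):
        mean_a, r2_a = simulate(design, benchmark)
        mean_b, _ = simulate(design, revised)
        print(f"{design}: R^2={r2_a:.2f}, mean benchmark={mean_a:.2f}, mean revised={mean_b:.2f}")
```

Under these assumptions, the overall-last design shows a noticeably higher R-squared against the attribute average, and adding one low-scoring attribute lowers its mean overall rating, while the overall-first mean stays essentially unchanged. The size of both effects depends entirely on the assumed `context_weight`.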

The preceding discussion applies to new studies that do not need to match prior historical data. For an existing study with an overall-last structure already in place, any potential advantages of switching to an overall-first design could be outweighed by the need to track historical trends as accurately as possible.


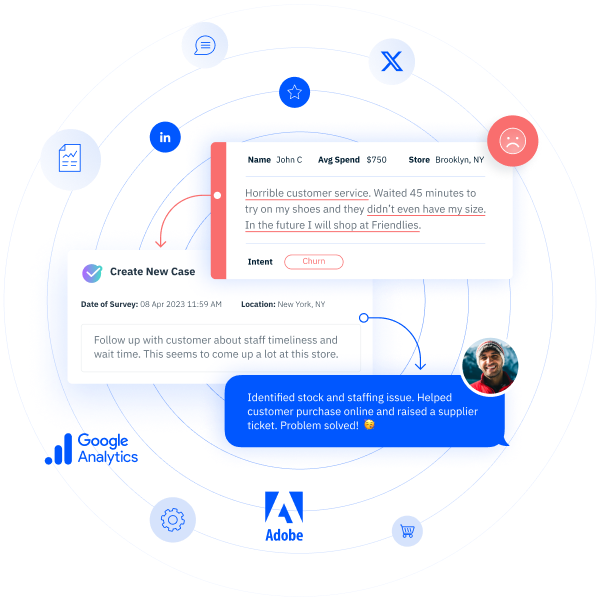

MaritzCX believes organizations should be able to see, sense and act on the experiences and desires of every customer, at every touch point, as it happens. We help organizations increase customer retention, conversion and lifetime value by ingraining customer experience intelligence and action systems into the DNA of business operations. For more information, visit www.maritzcx.com.