Earlier this year I shifted my priorities and focus, and chose pleasure and purpose over some ingrained habits and needs.

I was so “busy being busy” that I had forgotten the importance of slowing down and taking stock. I had neglected the basic need to listen actively and connect properly with the many voices and opinions available to me. As someone who delights in identifying patterns, sharing theories, and having an opinion, I found myself recycling old narratives too often. I was running the risk of becoming stale, or too comfortable with my long-established talk track.

I needed some new goals. I decided to set deadlines that would force me both to free up time to learn and to create the right environment to get energised. So I set up regular “industry sessions” for my colleagues, where we could discuss the hot topics of the day.

Out of this year’s sessions came some very intriguing stories and lessons from the customer experience (CX) industry. Here are just a few that I’d like to share with you.

Personalisation

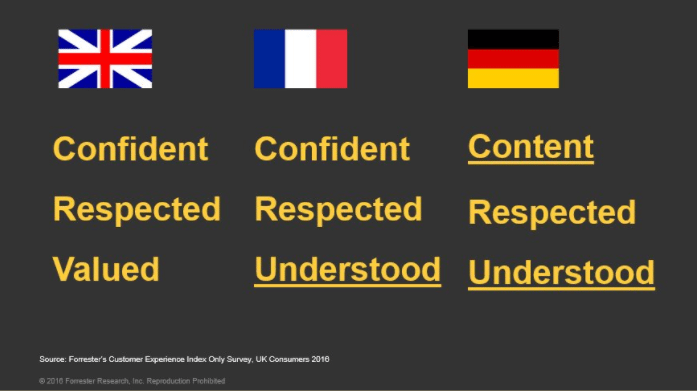

Personalisation is often discussed in relation to targeted marketing, and as consumers we have come to appreciate brands’ efforts to personalise our experience. Brands know that establishing a connection (friendliness, trust, being made to feel valued) drives customer satisfaction, loyalty, and increased spend.

Being “personal” has also created opportunities to strengthen important connections, even when the execution is imperfect. Starbucks, as a global brand, is the antithesis of local, and yet it tapped into the importance of feeling uniquely valued through its “Can I have your name?” approach. At first disruptive and peculiar, the approach is now hard for other brands to copy.

My local train station has a Starbucks franchise, and whenever I approach the counter they know I want a flat white. Unfortunately, although I have tried on a number of occasions to tell Eddie that my name is not Matt, that is still the name that appears on my cup. My “Britishness” means I can only let it roll on now, and secretly I enjoy the regularity of this mistake. It is human and therefore wonderfully imperfect, and in many ways more effective than communication driven by algorithms.

Emerging Labels And Transparency

We have perhaps already grown a little weary of contemplating millennials, and are now seeing more articles hypothesising about Gen Z (“the hyper-millennials”) and what they will bring to the party. How different will their customer expectations be from those of us in Generation X? Are they really that much more sensible?

However, a demand for authenticity, and for brands to do the right thing, may be what binds us all together: less X, Y, and Z, and more Generation C (more connected to each other through social reviews than ever before). In the space of a few months, two hip brands experienced opposite effects of how quickly word of mouth can kick in.

Airbnb was hit by news that customers who had left partway through their stays were seeing their reviews removed, because those stays were being treated as cancellations. Its spokesperson described these as “isolated incidents.” In contrast, Patagonia’s promise to donate all of its Black Friday sales to local environmental causes not only swelled its tills but also boosted awareness and brand equity as the positive word spread.

Language Shifts And Meaning

Back in April we were debating FOMO (fear of missing out) and how brands use this emotion to drive increased traffic, and to stoke paranoia amongst their competitors. I doubt many of us, however, saw “post-truth” (Oxford Dictionaries’ word of the year) coming up the rails.

Oxford defines post-truth as “relating to or denoting circumstances in which objective facts are less influential in shaping public opinion than appeals to emotion and personal belief.”

When the Temkin Group labelled 2016 the year of emotion, I doubt they expected System 2 thinking (slow, effortful, infrequent, logical, calculating, conscious) to be so heavily defeated by System 1 (fast, automatic, frequent, emotional, stereotypic, subconscious).

I should probably out myself here as a Guardian-reading, Radio-6-listening type, but I was certainly not the only person to view the recent UK and USA votes in a state of disbelief. Out of this darkness the team was at least able to properly understand two things:

1. Negativity Bias: humans are significantly more likely to remember negative experiences, and far more people pass on a bad experience than a good one. So minimise the chances of a bad experience as best you can, and don’t underestimate how motivating anger can be.

2. Confirmation Bias and our manufactured echo chambers: confirmation bias, our tendency to favour information that confirms what we already believe, helps build echo chambers, in which information, ideas, or beliefs are amplified or reinforced by transmission and repetition inside an “enclosed” system, where different or competing views are censored, disallowed, or otherwise underrepresented. The moral is to listen to your electorate, customers, and colleagues, and not to ignore feedback simply because it does not match your own take on the world. It could well come back to bite you.

Storytelling And Journeys In Experience

Finally, we have built further on our love of stories: a recognition of their importance, and of how best to narrate ideas to connect with an audience.

For example, we have agreed on the right structure for delivering meaningful communication:

1. Set up the situation that we, or our customers, find themselves in.
2. Share the catalyst that requires a change.
3. Explain the purpose and the central question that you will be answering.
4. Give your answer.
5. Provide the evidence.
6. Summarise and provide the call to arms.

Part of the reason story structures work so well to get a message across is that this is how our brains have evolved to take in important messages. Looking at experiences from a behavioural science perspective also offers lessons on how to structure any interaction for the greatest effect: get the difficult things out of the way early (without prompting customers to churn), spread the pleasure, and end on a high.

We can all benefit from taking more care over our opportunities to communicate.

Before I conclude, I want to thank all the commentators out there who take the time to share and contribute to the mix of opinions and learning available to anyone willing to listen.

We should all continuously remind ourselves that CX does not exist in a bubble. Although my team started out looking at emerging CX-specific topics, such as customer effort metrics, we were soon drawn into exploring the outer reaches of behaviour and motivation in general. This is because we recognised that many factors can influence a brand’s ability to deliver against its customer promise, its employees’ capacity to deliver that proposition consistently, and its customers’ appetite to appreciate and be motivated by these efforts.

My resolution for 2017 is to stay curious, but to contribute more. I will therefore leave you with a message that resonated with me this summer. It came not from the usual sages but from the English RFU, as part of its Level 1 Coaching course. Although it is aimed at how we work with and develop young rugby players, the argument applies to all of us who are in a position of influence:

“Our players have the capacity to outgrow us if we stand still. We may restrict them from achieving their full potential if we fail to recognise the need to continue our own development.”