In the world of customer experience, surveys have been a reliable source of feedback for decades. As we make our way forward with new CX technologies and approaches, survey fatigue remains a key operational concern. CX professionals are finding it more challenging than ever to keep program momentum alive. Today, I’m going to share some tips for reviewing your survey program for better response rates, higher program engagement, and more representative results. Use these tips to deliver excellent experiences for your customers while demonstrating that their voice is being heard!

The Road to Alleviating Survey Fatigue

As our approaches to customer experience advance, so does the challenge of survey fatigue. CX professionals confront this hurdle head-on as they strive to maintain the momentum of their survey programs. In the following sections, I will walk through strategies that increase your response rate, invigorate program engagement, and yield more representative results. By implementing these techniques, you’ll provide outstanding customer experiences and show customers that their feedback is genuinely valued, not just heard.

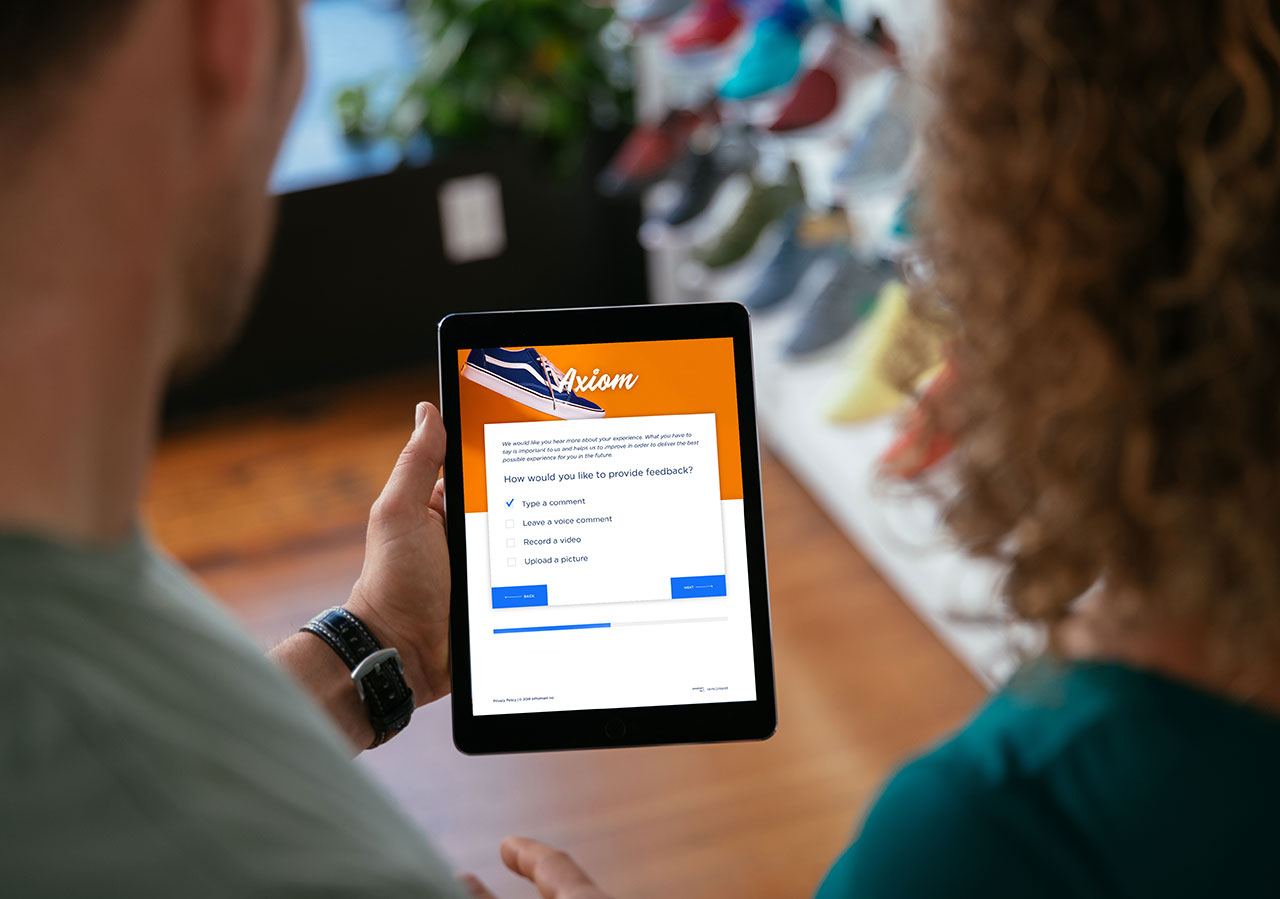

#1. Make Surveys Shorter. A LOT Shorter.

How many times have you called a customer service representative and thought, “I am your customer, so you should already know all these details about me”? People may well be thinking the same about your surveys. Ideally, experience surveys should take 2-4 minutes to complete, which is easily achieved by cutting the questions to which you already know the answers. Start by removing surplus demographic or operational questions whose answers could be sourced from your CRM or data lake (e.g. age, products held, customer tenure). Shorter surveys reduce survey fatigue and ultimately improve response rates.
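As a rough illustration of the idea above, here is a minimal Python sketch that drops draft questions whose answers already exist in a CRM extract, then attaches the CRM attributes to each response after submission. The record layout, field names (`age`, `products_held`, `tenure_years`, `nps`), and both helper functions are hypothetical and only stand in for whatever your own CRM or data lake exposes.

```python
# Hypothetical CRM extract keyed by customer ID (field names are illustrative).
CRM_RECORDS = {
    "C001": {"age": 42, "products_held": 3, "tenure_years": 7},
    "C002": {"age": 29, "products_held": 1, "tenure_years": 2},
}

# Draft survey: each question names the data field it would populate.
DRAFT_QUESTIONS = [
    {"field": "age", "text": "How old are you?"},
    {"field": "tenure_years", "text": "How long have you been a customer?"},
    {"field": "nps", "text": "How likely are you to recommend us?"},
    {"field": "nps_reason", "text": "What is the main reason for your score?"},
]


def trim_survey(questions, crm_records):
    """Keep only questions whose answers are not already held in the CRM."""
    known_fields = set()
    for record in crm_records.values():
        known_fields.update(record)  # collect every field name the CRM holds
    return [q for q in questions if q["field"] not in known_fields]


def enrich_response(response, crm_records):
    """Attach CRM attributes to a survey response after it is submitted."""
    crm_data = crm_records.get(response["customer_id"], {})
    return {**crm_data, **response}  # survey answers win on any field clash


short_survey = trim_survey(DRAFT_QUESTIONS, CRM_RECORDS)
enriched = enrich_response(
    {"customer_id": "C001", "nps": 9, "nps_reason": "Fast support"},
    CRM_RECORDS,
)
```

In this sketch the customer only answers the two experience questions, while age and tenure are joined on afterwards for analysis. A real program would also need to confirm that the CRM data is complete and current enough to rely on.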

Another technique for fighting survey fatigue that has proven successful for many brands is to use microsurveys in mobile and other digital environments. You can set up a survey at each key digital touchpoint (like a mobile app or website) that presents a one- or two-question microsurvey with an open text box to capture immediate, in-the-moment responses from customers.

Learn how Hootsuite tripled their Net Promoter Score by using InMoment’s microsurveys!

#2. Ask Survey Questions That Drive Action.

Whilst “good” survey questions vary from industry to industry, there are some overarching considerations that you need to keep in mind when drafting customer survey questions:

- Make sure each survey question has an owner within your organisation.

- Consider the type of action that can be taken within your organisation from this question.

- Minimise the words used in your questions. If the idea is clear without excess words, trim down wherever possible.

- Confirm each survey question is aligned to customer experience goals, targets, or both (e.g. expected front line behaviour or a KPI).

By keeping each of these principles in mind, you’ll ensure that each question can drive action within your organisation. You can then reference those actions in your comms to demonstrate that you’ve listened to customer feedback and acted on it to improve the customer experience!

Want to see what a survey that drives action looks like? Learn how Pizza Hut UK partnered with InMoment to optimise its survey design, doubling the average number of survey responses. Read the full Pizza Hut customer story today!

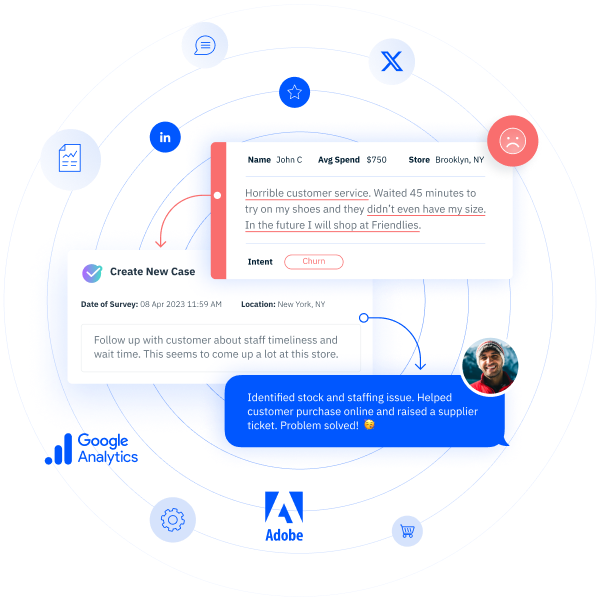

#3. Make Your Surveys Count: Pull Transactional and Journey Surveys Into Your Case Management Program

Surveys can be seen as the starting point of a customer conversation. Case management programs, also known as closed loop feedback (CLF) programs, enable trained staff to connect with customers one-on-one. Frontline staff call customers back to understand why an experience was either great or has room for improvement, which gives them a chance to really connect with customers and hear their stories firsthand. These conversations can help drive continuous improvement initiatives, or provide customer-driven evidence to support larger initiatives that may require a business case. Further, if you run the program with a treatment / control approach (e.g. 50% of CLF-qualifying customers receive a call), you can track how customers’ behaviour has changed after you close the loop.
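The treatment / control approach can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the customer IDs, the `retained` outcome flag, and the function names are hypothetical, and the behaviour you track (repeat purchase, spend, churn) depends on your business.

```python
import random
from statistics import mean


def assign_groups(customer_ids, treatment_share=0.5, seed=2024):
    """Randomly split CLF-qualifying customers into treatment (call) and control (no call)."""
    shuffled = list(customer_ids)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    cutoff = round(len(shuffled) * treatment_share)
    return set(shuffled[:cutoff]), set(shuffled[cutoff:])


def retention_lift(retained, treatment, control):
    """Difference in retention rate between called and uncalled customers.

    `retained` maps customer ID -> True/False (a hypothetical outcome measure).
    Both groups must be non-empty.
    """
    treated_rate = mean(1 if retained[c] else 0 for c in treatment)
    control_rate = mean(1 if retained[c] else 0 for c in control)
    return treated_rate - control_rate


qualifying = [f"C{n:03d}" for n in range(1, 11)]  # ten hypothetical customer IDs
treatment, control = assign_groups(qualifying)
```

Once the follow-up period ends, passing the observed outcomes to `retention_lift` gives a simple estimate of how much closing the loop changed behaviour. With small groups that difference can easily be noise, so a real program would want larger samples and a significance test before drawing conclusions.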

Don’t underestimate the potential positive brand impact you’ll see when customers receive a call from a representative after clicking “submit” on their survey. Optimising case management gives your program the opportunity to evolve beyond analytics and start contributing more directly to other operational areas of the business.

Let’s be honest: in a world where we can reach customers in so many different ways, asking them “how would you rate XYZ”, “why did you rate XYZ”, and “thinking over these elements, how would you rate…” can be boring, especially in a long survey. Instead, we encourage you to make your surveys shorter to fight survey fatigue, and to look beyond the questions to discover how the customer’s voice can influence your organisation’s operational performance through CLF and actionable insights.

To learn more about what makes a great survey and how to combat survey fatigue, schedule a demo today!